AI Solutions

“The number of transistors incorporated in a chip will approximately double every 24 months”

Fueling the AI Revolution through Exponential Growth in Computing Power

Since Gordon Moore first described it in 1965, Moore’s Law has remained a guiding principle in the technology industry. The steady doubling of the number of transistors on a chip has driven major gains in computing capability while lowering the cost of devices.
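
As a rough illustration of what a fixed doubling period implies, this sketch projects a transistor count with N(t) = N0 × 2^(t / T), using T = 2 years as in the quote above. The starting count of one billion transistors is a hypothetical value chosen for easy arithmetic. Real chip generations do not track this idealized curve exactly.

```python
def projected_transistors(n0: float, years: float, doubling_period_years: float = 2.0) -> float:
    """Idealized Moore's Law: the count doubles once every doubling period."""
    return n0 * 2 ** (years / doubling_period_years)


start = 1_000_000_000  # hypothetical starting transistor count
for years in (2, 10, 20):
    print(years, f"{projected_transistors(start, years):,.0f}")
# 2 2,000,000,000
# 10 32,000,000,000
# 20 1,024,000,000,000
```

Over 20 years, a two-year doubling period compounds to 2^10 = 1,024, roughly a thousandfold increase.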

The implications of Moore’s Law are profound. Each doubling of computing power has been met by a surge in demand. Over the last decade, that demand has been driven largely by the need for hardware that can support increasingly complex AI systems.

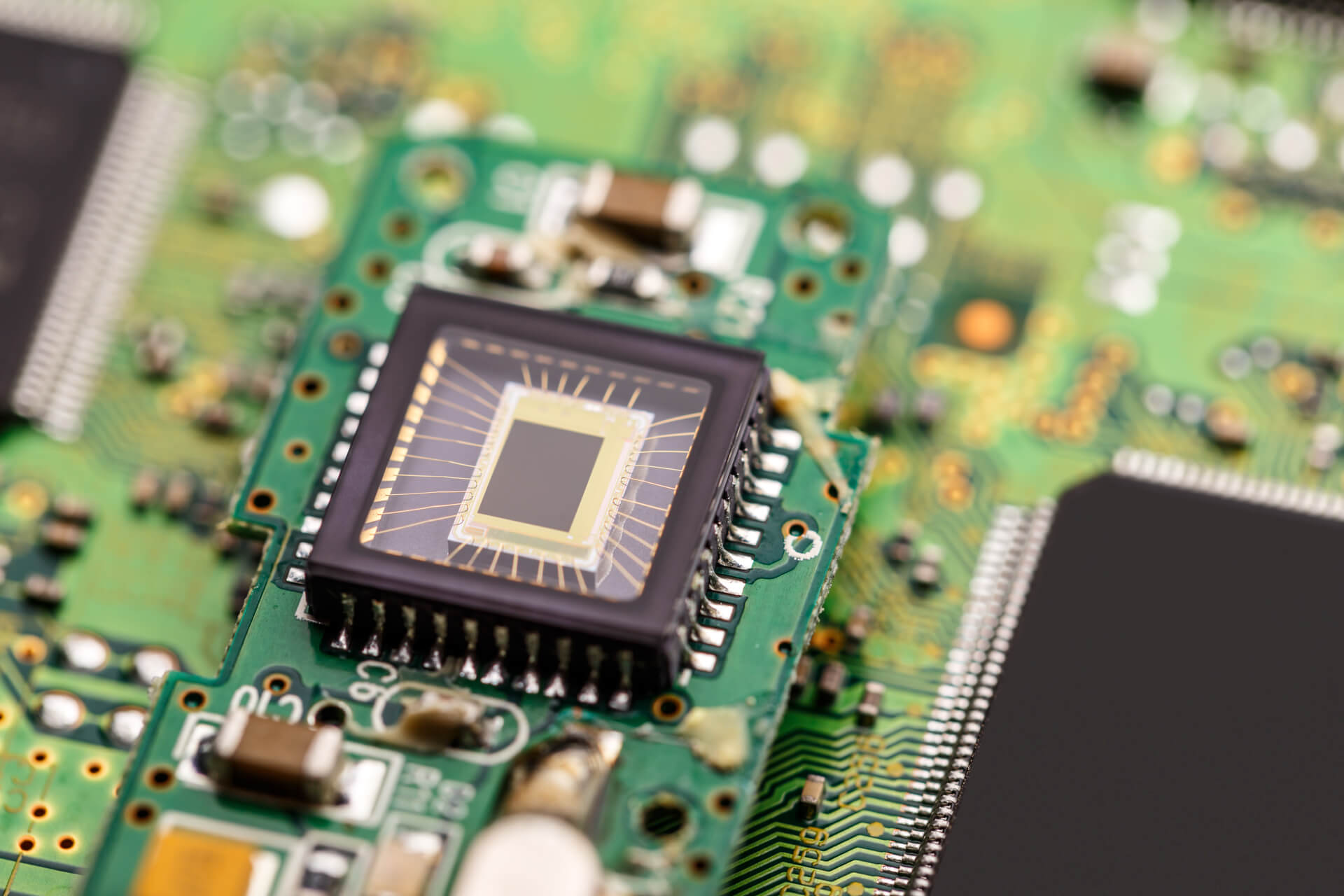

Semiconductors, the Backbone of AI's Leap Forward

Many recent advances in AI can be attributed to improvements in semiconductor technology. Faster, more capable chips allow AI systems to be trained on larger datasets, which generally leads to more accurate and effective models.

Several types of semiconductor chips play a crucial role in running AI systems, including CPU (Central Processing Unit) chips, GPU (Graphics Processing Unit) chips, FPGA (Field-Programmable Gate Array) chips, and ASIC (Application-Specific Integrated Circuit) chips. Each type offers distinct advantages and is suited to particular AI workloads.

The Role of Diverse Technologies

GPU chips, for example, excel at parallel processing, which makes them well suited to the deep learning algorithms common in AI applications. FPGA chips can be reconfigured after manufacture, which allows rapid prototyping and hardware customized for specific AI models. ASIC chips are designed for a single task, offering high performance and energy efficiency for specialized AI applications.
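
To make the parallelism point concrete, this sketch computes a dense (fully connected) neural-network layer, y = W x. Each output element of that layer is an independent dot product. The example assumes plain Python lists and uses a thread pool only to show that the rows can be processed as separate tasks and still produce the same answer. Because of CPython's global interpreter lock, this version will not actually run faster. A GPU, by contrast, executes many such independent operations at once in hardware. The weight matrix and input vector are hypothetical values.

```python
from concurrent.futures import ThreadPoolExecutor


def dot(row: list[float], x: list[float]) -> float:
    """Dot product of one weight row with the input vector."""
    return sum(w * xi for w, xi in zip(row, x))


def dense_sequential(weights: list[list[float]], x: list[float]) -> list[float]:
    """Compute y = W x one row at a time."""
    return [dot(row, x) for row in weights]


def dense_parallel(weights: list[list[float]], x: list[float], workers: int = 4) -> list[float]:
    """Compute y = W x with each row submitted as a separate task.

    No row depends on another row's result, so the tasks may finish in any
    order; Executor.map still returns the results in input order.
    """
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(lambda row: dot(row, x), weights))


W = [[1, 2], [3, 4], [5, 6]]  # hypothetical 3x2 weight matrix
x = [1, 1]                    # hypothetical input vector
print(dense_sequential(W, x))  # [3, 7, 11]
print(dense_parallel(W, x))    # [3, 7, 11]
```

Because every row is independent, the work splits cleanly across however many processing units are available. GPUs are built to exploit exactly this property.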

From autonomous vehicles to healthcare diagnostics, AI is transforming industries across the board, powered by a diverse range of semiconductor technologies. However, AI’s progress depends heavily on the availability of computing power. As AI systems grow more complex, their demand for computing resources keeps rising.